In this article, we will learn about Llama 2, a powerful large language model (LLM), and see how to use it from Python.

What is the Llama 2 model?

Llama 2 is a large language model (LLM) released by Meta (formerly Facebook). It is a collection of pre-trained and fine-tuned models whose weights are openly available. Llama 2 builds on the original Llama model, and Meta released it in partnership with Microsoft.

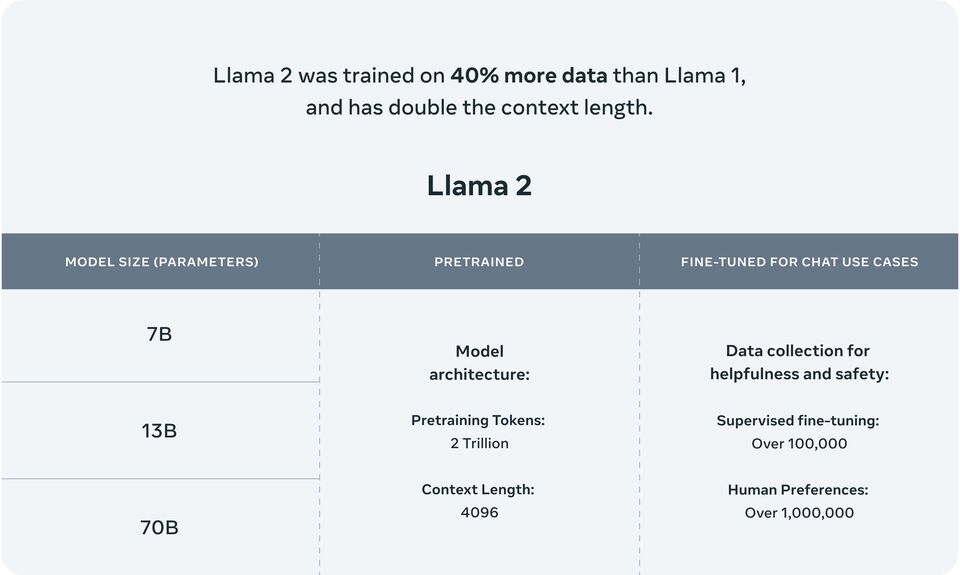

The Llama 2 release introduces a family of pre-trained and fine-tuned LLMs, ranging in scale from 7B to 70B parameters (7B, 13B, 70B). The pre-trained models come with significant improvements over the Llama 1 models, including being trained on 40% more tokens, having a much longer context length (4k tokens), and using grouped-query attention for fast inference of the 70B model!

In short, Llama 2 is an LLM that can help us with most NLP tasks.

You can find more details on the official Llama 2 website.

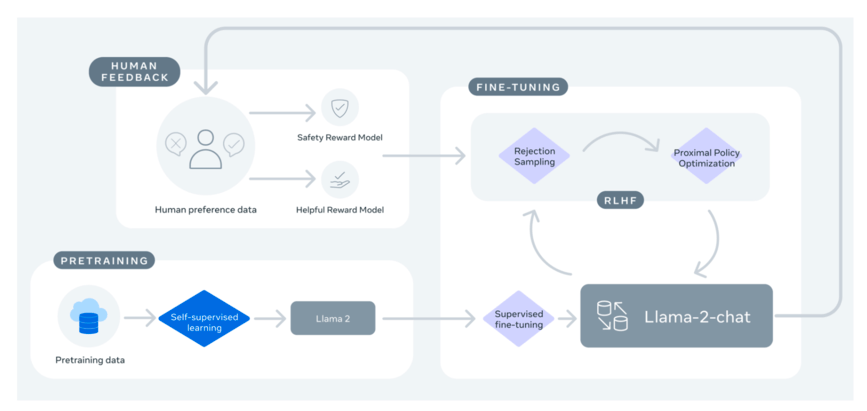

How does Llama 2 work?

Now that we have seen what Llama 2 is, let’s look at how it works. Llama 2, much like its predecessor, the original Llama model, isn’t your run-of-the-mill AI. It is built on the transformer architecture introduced by Google researchers, with a handful of refinements borrowed from other well-known models.

Here’s the lowdown on Llama 2’s upgrades:

- RMSNorm Pre-normalization: Borrowing the pre-normalization trick from GPT-3, Llama 2 normalizes the input of each transformer sub-layer, rather than its output, using RMSNorm.

- SwiGLU Activation Function: Ever heard of Google’s PaLM? Llama 2 certainly has, and it’s brought in the SwiGLU activation function to level up its game.

- Grouped-Query Attention: The 7B and 13B models stick with standard multi-head attention, while the 70B model uses grouped-query attention, in which groups of query heads share key and value heads. Multi-query attention is the extreme case where all query heads share a single key/value head.

- Rotary Positional Embeddings (RoPE): Taking inspiration from GPT Neo, Llama 2 uses rotary positional embeddings, which encode each token’s position by rotating its query and key vectors.
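To make the components in the list above more concrete, here is a minimal pure-Python sketch of RMSNorm, a SwiGLU feed-forward layer, and rotary embeddings. The function names, the `eps` and `base` defaults, and the choice to rotate consecutive pairs of values are assumptions made for illustration; real implementations work on tensors and may pair dimensions differently.

```python
import math

def rms_norm(x, weight, eps=1e-6):
    """Divide x by its root mean square, then apply a learned per-dimension gain."""
    mean_square = sum(v * v for v in x) / len(x)
    scale = 1.0 / math.sqrt(mean_square + eps)  # eps default is an assumption
    return [v * scale * w for v, w in zip(x, weight)]

def silu(v):
    """SiLU (Swish) activation: v * sigmoid(v)."""
    return v / (1.0 + math.exp(-v))

def matvec(matrix, x):
    """Multiply a matrix (list of rows) by a vector."""
    return [sum(m * v for m, v in zip(row, x)) for row in matrix]

def swiglu_ffn(x, w_gate, w_up, w_down):
    """SwiGLU feed-forward: w_down @ (silu(w_gate @ x) * (w_up @ x))."""
    gate = [silu(g) for g in matvec(w_gate, x)]
    up = matvec(w_up, x)
    hidden = [g * u for g, u in zip(gate, up)]
    return matvec(w_down, hidden)

def rope(x, position, base=10000.0):
    """Rotate each consecutive pair (x[0], x[1]), (x[2], x[3]), ... by an
    angle that grows with the token position. len(x) must be even."""
    dim = len(x)
    out = []
    for i in range(0, dim, 2):
        theta = position * base ** (-i / dim)  # lower frequency for later pairs
        cos_t, sin_t = math.cos(theta), math.sin(theta)
        a, b = x[i], x[i + 1]
        out.extend([a * cos_t - b * sin_t, a * sin_t + b * cos_t])
    return out
```

A useful property to check: the dot product between a rotated query at position m and a rotated key at position n depends only on m - n, which is how rotary embeddings give attention a sense of relative position.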

Regarding training, Llama and Llama 2 roll with the AdamW optimizer, the secret sauce of deep learning.
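For readers who have not met AdamW before, the sketch below shows one update step for a single scalar parameter. It is only an illustration of the general algorithm: the function name and all hyperparameter defaults (`lr`, `beta1`, `beta2`, `eps`, `weight_decay`) are assumptions, not values taken from this article.

```python
import math

def adamw_step(w, grad, m, v, t, lr=3e-4, beta1=0.9, beta2=0.95,
               eps=1e-5, weight_decay=0.1):
    """One AdamW update for a scalar parameter. Returns (w, m, v).

    t is the 1-based step count; all defaults are illustrative assumptions.
    """
    # Exponential moving averages of the gradient and the squared gradient
    m = beta1 * m + (1 - beta1) * grad
    v = beta2 * v + (1 - beta2) * grad * grad
    # Bias correction for the zero-initialized averages
    m_hat = m / (1 - beta1 ** t)
    v_hat = v / (1 - beta2 ** t)
    # Decoupled weight decay: shrink the weight directly, not via the gradient
    w = w - lr * weight_decay * w
    # Adam step scaled by the running gradient magnitude
    w = w - lr * m_hat / (math.sqrt(v_hat) + eps)
    return w, m, v

w, m, v = 1.0, 0.0, 0.0
w, m, v = adamw_step(w, grad=0.5, m=m, v=v, t=1)
print(w)
```

The "W" in AdamW refers to the decoupled weight decay line: the weight is shrunk separately from the adaptive gradient step instead of adding an L2 term to the gradient.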

But here’s where Llama 2 takes a leap over the original. It doubles the context length, handling 4096 tokens compared to the original’s 2048. And it doesn’t stop there: its larger models adopt grouped-query attention (GQA), which speeds up inference by letting several query heads share the same keys and values.
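A rough way to see why grouped-query attention helps is to compare the size of the key/value cache with and without it. The sketch below is back-of-the-envelope arithmetic, not Meta's code: the helper names are hypothetical, and the layer count, head counts, and head dimension are assumed values for a 70B-sized model that do not come from this article.

```python
def kv_head_for_query_head(query_head, n_query_heads, n_kv_heads):
    """Return the key/value head that a given query head shares under GQA."""
    if n_query_heads % n_kv_heads != 0:
        raise ValueError("n_query_heads must be divisible by n_kv_heads")
    group_size = n_query_heads // n_kv_heads
    return query_head // group_size

def kv_cache_bytes(n_layers, n_kv_heads, head_dim, n_tokens, bytes_per_value=2):
    """Bytes needed to cache keys and values (the leading 2) for n_tokens."""
    return 2 * n_layers * n_kv_heads * head_dim * n_tokens * bytes_per_value

# Assumed shape for a 70B-sized model (not stated in the article)
N_LAYERS, N_Q_HEADS, N_KV_HEADS, HEAD_DIM = 80, 64, 8, 128
CONTEXT = 4096  # context length mentioned in the article

# Multi-head attention: one key/value head per query head
mha = kv_cache_bytes(N_LAYERS, N_Q_HEADS, HEAD_DIM, CONTEXT)
# Grouped-query attention: 8 query heads share each key/value head
gqa = kv_cache_bytes(N_LAYERS, N_KV_HEADS, HEAD_DIM, CONTEXT)

print(f"MHA cache: {mha / 2**30:.2f} GiB, GQA cache: {gqa / 2**30:.2f} GiB")
# prints "MHA cache: 10.00 GiB, GQA cache: 1.25 GiB"
```

Setting `n_kv_heads` equal to the number of query heads gives ordinary multi-head attention, and setting it to 1 gives multi-query attention, so GQA sits between the two.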

Is Llama 2 free?

Yes. Meta makes the Llama 2 models available free of charge for both research and commercial use under its own community license, so many people can build on them. Access requires accepting that license, which includes an acceptable-use policy and additional terms for services with very large user bases.

Where can I get Llama 2 7B?

You can download Llama 2 7B and the other models from Meta’s official Llama 2 website. First, you need to request access from Meta, which is free. Alternatively, you can download the models from the Hugging Face Hub.

Once access is granted and the download has finished, we can start using the model. Hugging Face also provides an API for Llama 2 that can be integrated directly into your code.

How to run Llama 2 online?

LLMs are very large and need a lot of computing power, usually a GPU with plenty of memory, so many users cannot comfortably run Llama 2 on their own machine. But don’t worry: there are online Llama 2 demos.
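To get a feel for how large these models are, the following sketch estimates the memory needed just to hold the weights. It assumes round parameter counts (7, 13, and 70 billion) and ignores activations, the key/value cache, and framework overhead, so treat the numbers as rough lower bounds. The helper name is hypothetical.

```python
def weight_memory_gib(n_params, bytes_per_param):
    """Approximate memory for the model weights alone, in GiB."""
    return n_params * bytes_per_param / 2**30

# Nominal parameter counts (assumed round numbers)
for name, n_params in [("7B", 7e9), ("13B", 13e9), ("70B", 70e9)]:
    fp16 = weight_memory_gib(n_params, 2)    # 16-bit floats: 2 bytes per weight
    int4 = weight_memory_gib(n_params, 0.5)  # 4-bit quantization: half a byte per weight
    print(f"{name}: ~{fp16:.0f} GiB in float16, ~{int4:.0f} GiB at 4 bits per weight")
```

Even the smallest model needs roughly 13 GiB in float16, which is more than many consumer GPUs offer. This is the reason quantized variants and hosted demos are popular.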

You can visit LMSYS to try Llama 2 online through a chat UI. Perplexity’s Llama demo is another good option for testing Llama 2 70B and Code Llama, which generates code from prompts. Its UI also looks quite futuristic.

Now that we have covered the theory, it is finally time to start coding and build something with Llama 2.

How to Run Llama 2 locally?

We will use the most popular library in the NLP world: Hugging Face’s transformers. With transformers, we can access and fine-tune plenty of models. So let’s see how to use Llama 2 in Python.

Let us follow a step-by-step method to run Llama 2 in Python.

Step 1: First, let’s install the necessary Python packages we need.

```shell
pip install transformers accelerate optimum auto-gptq
```

Step 2: Let’s import the methods we require.

```python
from transformers import AutoTokenizer
import transformers
import torch
```

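Step 3: Now we load the Llama 2 tokenizer and build a text-generation pipeline for the model.

```python
# Gated repository: access must be approved before the download works
model = "meta-llama/Llama-2-7b-chat-hf"

tokenizer = AutoTokenizer.from_pretrained(model)
pipeline = transformers.pipeline(
    "text-generation",
    model=model,
    torch_dtype=torch.float16,  # half precision to reduce memory use
    device_map="auto",          # place the weights on the available devices
)
```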

Step 4: We use the pipeline defined in Step 3 to generate text from a prompt.

```python
sequences = pipeline(
    "Write me a story about Llama the cat.\n",
    do_sample=True,               # sample instead of always picking the top token
    top_k=10,                     # sample only from the 10 most likely tokens
    num_return_sequences=1,
    eos_token_id=tokenizer.eos_token_id,
    max_length=200,               # prompt plus generated tokens
)
```
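The `do_sample=True` and `top_k=10` arguments in the call above ask the model to sample each next token from its 10 most likely candidates. The sketch below illustrates that idea on a plain list of scores. It is a conceptual example with a hypothetical function name, not the code the transformers library runs internally.

```python
import math
import random

def top_k_sample(logits, k, rng=random):
    """Sample a token index from the k highest-scoring logits."""
    if not 1 <= k <= len(logits):
        raise ValueError("k must be between 1 and len(logits)")
    # Indices of the k largest logits, best first
    top = sorted(range(len(logits)), key=lambda i: logits[i], reverse=True)[:k]
    # Softmax weights over the kept logits only (shifted by the max for stability)
    max_logit = logits[top[0]]
    weights = [math.exp(logits[i] - max_logit) for i in top]
    # random.choices normalizes the weights for us
    return rng.choices(top, weights=weights, k=1)[0]

toy_logits = [0.1, 5.0, 2.0, -1.0]  # made-up scores for a 4-token vocabulary
print(top_k_sample(toy_logits, k=2))
```

With `k=1` this reduces to greedy decoding. Larger values of `k` allow more varied output at the cost of occasionally picking less likely tokens.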

Step 5: Lastly, let’s print the results one by one using a loop.

```python
for seq in sequences:
    print(f"Result: {seq['generated_text']}")
```

When you run the whole code, it will generate a short story for you, capped at 200 tokens including the prompt. By changing the prompt, you can use the same code for Llama 2 chat and other tasks.
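For chat-style use, the chat variants of Llama 2 are generally described as expecting the user message wrapped in `[INST]` tags, with an optional system prompt inside `<<SYS>>` markers. The helper below is a small sketch of that single-turn format. The function name is hypothetical, and the exact template is worth checking against the model card before relying on it.

```python
def build_llama2_chat_prompt(user_message, system_prompt=None):
    """Wrap a single user turn in the [INST] ... [/INST] chat template.

    The tokenizer normally adds the beginning-of-sequence token itself,
    so it is not included here.
    """
    if system_prompt:
        return f"[INST] <<SYS>>\n{system_prompt}\n<</SYS>>\n\n{user_message} [/INST]"
    return f"[INST] {user_message} [/INST]"

prompt = build_llama2_chat_prompt(
    "Write me a story about Llama the cat.",
    system_prompt="You are a helpful storyteller.",  # example system prompt
)
print(prompt)
```

The resulting string can be passed to the pipeline in place of the plain prompt. Recent versions of transformers also offer `tokenizer.apply_chat_template`, which builds the prompt from a list of messages and may be the more robust choice.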

Conclusion

To wrap up, Llama 2 is a capable LLM that could shake things up in the NLP world. It combines features such as RMSNorm pre-normalization, the SwiGLU activation function, rotary positional embeddings, and, in its largest model, grouped-query attention. With all that, Llama 2 can handle a whole bunch of NLP tasks.