Table of Contents

Today we are going to learn about how we can build a large language model from scratch in Python along with all about large language models.

So let’s start by knowing what exactly a large language model is and what architecture they follow to build one.

What is the Large Language Model?

Think of large language models (LLMs) as super-smart computer programs that specialize in understanding and creating human-like text. They use deep learning techniques and transformer models to analyze massive amounts of text data to achieve this. These models, often referred to as neural networks, are inspired by how our own brains process information through networks of interconnected nodes, similar to neurons.

Large language models are also referred to as neural networks (NNs), which are computing systems inspired by the human brain. These neural networks work using a network of nodes that are layered, much like neurons.

LLMs are like the swiss army knives of computers. They can learn all sorts of things, not just languages. For example, they can learn about biology or finance and help with tasks in those areas. To get really good at their jobs, they first learn a bunch of general stuff and then fine-tune their skills to specialize in specific tasks. Think of it like learning the basics of cooking and then becoming a master chef.

How do large language models work?

LLMs are powered by something called “transformer networks.” Think of these as filters that help them understand the context and meaning of words in sentences. These transformers have different layers, each with a specific role, like self-attention layers that decide what parts of the input are important, and feed-forward layers that help generate output based on the input.

Two crucial innovations make transformers especially useful for LLMs. First, there’s “positional encoding,” which helps the model understand the order of words in a sentence without them being in sequence. Second, there’s “self-attention,” which lets the model assign different levels of importance to different input parts. This means the model can focus on what matters most, kind of like how we pay attention to essential details in a story.

History of Large Language Model?

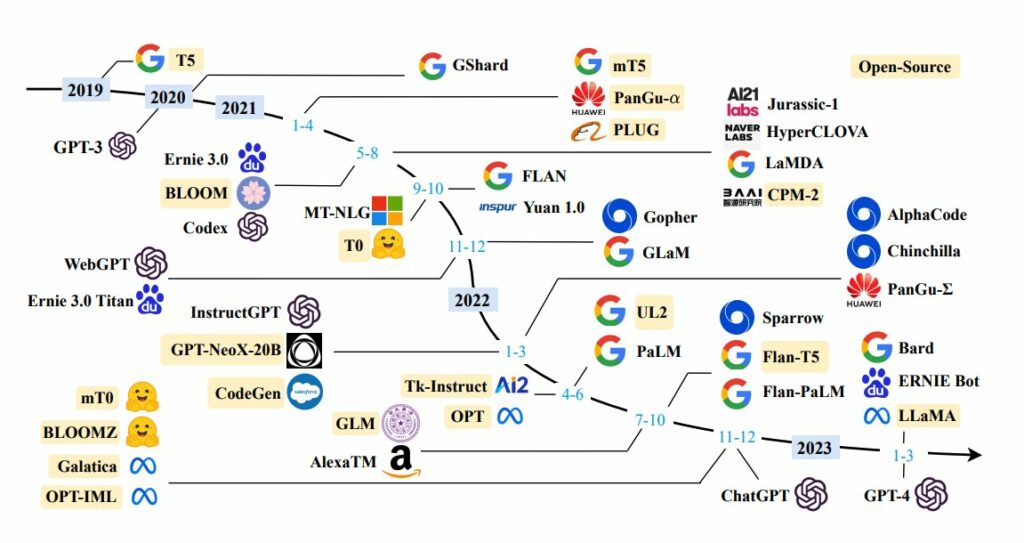

The concept of large language models is not that much new, it can be traced back to the early 1950s and 1960s, as the recent formation of NLP(natural language processing) began. But the word LLM or large language model comes after the invention of transformer models which we learned in the above topic.

Source: Twitter.com

It started when researchers at IBM and Georgetown University designed and developed an automatic translation system that can translate a collection of phrases from the Russian language to English.

But the breakthrough came in the year 2010 and then in 2019 when in 2010 a large number of research started being conducted in the field of artificial neural networks boosting the edge for setting the ground for the development of large language models.

And then 2019 google introduced it BERT Model(bidirectional encoder representations from transformers) which began the race to develop an advanced large language model and after that came the GPT3 model and GPT3.5 also known as ChatGPT that took over the internet like a stome.

So enough of the theory let’s start the coding part now and create your own LLM model from scratch.

Prerequisites for building own LLM Model:

We will require the following prerequisites.

- Python environment

- GPU (For fast training)

- Data

Let’s see how each one can be achieved.

Data Collection for training your own LLM model.

Large language models are very information-hungry, the more data the more smart your LLM model will be. You can use any data collection method like web scraping or you can manually create a text file with all the data you want your LLM model to train on.

Here I’m using the Tinyshakespeare dataset that can be downloaded from here.

Now let’s see how to build a large language model from scratch.

By the way Meta Llama2 is also very good model compre to ChatGPT you can learn more about What is Llama 2 from here.

Train your own LLM Model

To train our own LLM model we will use an amazing Python package called Createllm, as it is still in the early development period but it’s still a potent tool for building your LLM model.

It uses transformer architecture to train custom data LLM models.

Let’s see how easily we can build our own large language model like chatgpt. But let’s first install the createllm package to our Python environment.

Use the following command to install createllm.

pip install createllmExample 1: How to Build a Large Language Model from Scratch

Step 1: Import required functions and methods from the python createllm package.

from createllm import CreateLLMStep 2: Specify the path to our dataset.

path = "data/tinyshakespeare/input.txt"Step 3: Run the model trainer from the createllm method by providing a path to the dataset and the number of iterators to train on, by default it trains on 5000 iterators.

model = CreateLLM.GPTTrainer(path,max_iters=100)

model = model.trainer()Once you run the above code it will start training the LLM model on the given data and once the training is completed it will create a folder called CreateLLMModel in your root folder.

Output:

14.356316 Million Parameters

step 0: train loss 4.6309, val loss 4.6366

Directory 'CreateLLMModel' created.

Model Trained Successfully As you can see our model is trained on our custom data successfully.

Please note that you can increase the number of iterators based on the size of the data.

Now let’s test how our model works in real time. Here also we will use the createllm library to inference our model.

from createllm import CreateLLM

model = CreateLLM.LLMModel("/Modelpath/CreateLLMModel")

print(model.generate("How are you?"))Output:

Im good, hwo ree you.As you can see our training was not that much sufficient as we trained our data on very less iterators.

How to Deploy LLM Model

Many people ask how to deploy the LLM model using python or something like how to use the LLM model in real time so don’t worry we have the solution for.

As we have learned how to build your own LLM now let’s deploy it on the web using python FastAPI library.

Let’s start the code for deployment.

Step 1: Install FastAPI, Uvicorn, and CreateLLM python package in our python variable.

pip install fastapi

pip install uvicorn

pip install createllmStep 2: Now import all of them.

from fastapi import FastAPI

from pydantic import BaseModel

from createllm import CreateLLMStep 3: Create a FastAPI object and class for taking input from the user.

app = FastAPI()

class InputString(BaseModel):

text: strStep 4: Last we will create a route for FastAPI using a function.

@app.post("/inputText")

async def process_string(data: InputString):

processed_text = data.text

model = CreateLLM.LLMModel("CreateLLMModel")

result = model.generate(processed_text)

return {"processed_text": result}Let’s run our code using the python uvicorn library to start our API.

Follow the below command to run the API, please note that this code cannot be run as other Python code like running py files.

uvicorn main:appMake sure your Python file name is main.py

Pretty easy right, Let’s use the curl tool to test our API working and see the results.

Step 5: Test API to fetch the result.

curl -X 'POST' \

'http://127.0.0.1:8000/inputText' \

-H 'accept: application/json' \

-H 'Content-Type: application/json' \

-d '{

"text": "How are you"

}'The output will be as follows

{

"processed_text": "How are you🙂a5zStl...xeL" /V GJoWl 'w Jarae bv n" &c : -:i🙁hha... 4i! t9 F "t 2aw″oh" TNT)qCO jr DTet")QzT iew\t j0'e0LV-7buA ke3H75Io8cTF rr (T 🙁 U:U XNaaw"

}We can see our output is a little messy as we have trained the model on very few data and iterators. But don’t you think it’s good for the start?

FAQ

What does LLM stand for?

LLM stands for large language model.

What are large language models?

A Large language model is a collection of deep learning models that are trained on a large corpus of data to understand and generate human-like text.